Banking giant JP Morgan raised eyebrows in 2012 when it revealed that it had lost a substantial amount of money because of poorly conceived trades it had made for its own account. The losses raised questions about the adequacy of its internal controls, and broader questions about the need for regulations to reduce systemic risk to the banking system. At the heart of the matter were the transactions made by “the London Whale,” the name given to a JP Morgan trading operation in the City by its counterparties because of the outsized bets it was making. Until that point, JP Morgan’s Chief Investment Office had been profitable and apparently well controlled. In the wake of the discovery of the large losses racked up by “the Whale,” JP Morgan launched an internal investigation into how they happened, and released the findings of the task force established to review the losses and their causes [PDF document].

One of the key points that came out of the internal investigation was the role of desktop spreadsheets in creating the mess. “The Model Review Group noted that the VaR [Value at Risk] computation was being done on spreadsheets using a manual process and it was therefore ‘error prone’ and ‘not easily scalable.’” The report also cited as an inherent operational issue the process of copying and pasting data into analytic spreadsheets “without sufficient quality control.” This form of data entry in any critical enterprise function is a hazard because the data sources themselves may not be controlled. After the fact it is impossible to positively identify the source of the data, and (unless specifically noted) its properties (such as time stamps of the source data) will also be indeterminate. These last two are sensitive issues when marking portfolios to market (that is, determining their value for periodic disclosure purposes), especially when there are thinly traded securities in that portfolio.
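One way to picture the alternative to untracked copy-and-paste is to attach provenance to every value as it enters a model. The following is a minimal Python sketch under stated assumptions, not something taken from the task force report: the `SourcedValue` type, the `load_price` and `is_stale` helpers and the feed name are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class SourcedValue:
    """A number that carries its origin with it (hypothetical type)."""
    value: float
    source: str          # name of the feed or system the value came from
    as_of: datetime      # time stamp of the source data
    loaded_at: datetime  # when the value entered the model


def load_price(value, source: str, as_of: datetime) -> SourcedValue:
    """Wrap a raw price so its source and time stamp stay identifiable."""
    if as_of.tzinfo is None:
        raise ValueError("as_of must be timezone-aware")
    return SourcedValue(float(value), source, as_of, datetime.now(timezone.utc))


def is_stale(price: SourcedValue, now: datetime, max_age: timedelta) -> bool:
    """True when the source time stamp is older than the allowed age."""
    return now - price.as_of > max_age


# Example: a quote from a hypothetical feed, checked two days later.
quote_time = datetime(2012, 3, 30, 16, 0, tzinfo=timezone.utc)
price = load_price("101.25", "vendor_feed_A", quote_time)
stale = is_stale(price, quote_time + timedelta(days=2), timedelta(days=1))
```

With a record like this, a reviewer marking a thinly traded position to market can see where a price came from and how old it is, which a pasted cell value cannot show.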

The report notes, “Spreadsheet-based calculations were conducted with insufficient controls and frequent formula and code changes were made.” In particular, the Internal Audit group had recommended greater clarity and documentation of how securities prices were arrived at. In implementing that recommendation, the individual responsible modified a spreadsheet and inadvertently introduced material calculation errors, which slipped through because the changes were not subject to a vetting process. Absent a thorough audit of a spreadsheet, these sorts of errors are difficult to spot.
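To illustrate what a basic vetting step might look like, here is a minimal Python sketch that fingerprints a workbook's formulas at sign-off and reports any formula that has changed since. It is an assumption-laden illustration, not the process JP Morgan used or adopted: the sheet is modeled as a plain dictionary of cell reference to cell text, and `formula_fingerprint` and `changed_formulas` are hypothetical helper names.

```python
import hashlib


def formula_fingerprint(cells: dict) -> str:
    """Hash every formula cell (text starting with '=') in a stable order.

    Plain data cells are ignored, so routine data updates do not
    invalidate a sign-off, but any formula edit does.
    """
    digest = hashlib.sha256()
    for ref in sorted(cells):
        text = cells[ref]
        if text.startswith("="):
            digest.update(f"{ref}\x00{text}\n".encode("utf-8"))
    return digest.hexdigest()


def changed_formulas(approved: dict, current: dict) -> list:
    """List cells whose content differs and is a formula in either version."""
    changed = []
    for ref in sorted(set(approved) | set(current)):
        old, new = approved.get(ref, ""), current.get(ref, "")
        if old != new and (old.startswith("=") or new.startswith("=")):
            changed.append(ref)
    return changed


# Example: a data refresh keeps the fingerprint; a formula edit does not.
approved = {"A1": "100", "A2": "=A1*2"}
data_refresh = {"A1": "105", "A2": "=A1*2"}
formula_edit = {"A1": "100", "A2": "=A1/2"}

same = formula_fingerprint(approved) == formula_fingerprint(data_refresh)
differs = formula_fingerprint(approved) != formula_fingerprint(formula_edit)
to_review = changed_formulas(approved, formula_edit)
```

A check of this kind does not say whether a change is right or wrong. It only ensures that no formula change reaches production use without someone being asked to review it.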

This was not the first time a financial institution incurred serious losses because of faulty spreadsheets. One of the first big debacles took place more than 20 years ago when a faulty spreadsheet caused First Boston to take a multimillion-dollar hit trading collateralized debt obligations, which had only recently been invented.

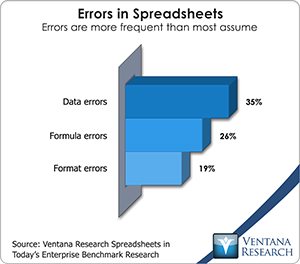

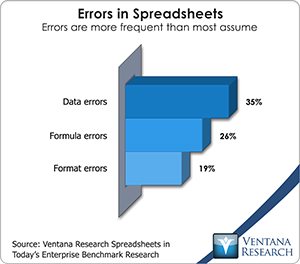

Spreadsheet mistakes are quite common. Our recent spreadsheet benchmark research confirmed that errors in data and formulas are common in users’ most important spreadsheets. There’s even an association, The European Spreadsheet Risks Interest Group, that tracks the fallout from spreadsheet errors. Yet, even when millions of dollars or euros are at stake, the misuse of spreadsheets persists.

It’s a good thing that spreadsheet hazards are intangible, or they might have been banned or heavily regulated long ago in the United States by the Occupational Safety and Health Administration (OSHA) or similar bodies in other countries. All kidding aside, the London Whale incident raises the question, “Why do people persist in using desktop spreadsheets when they pose this magnitude of risk?”

Our research finds that the answer is ease of use. This is particularly true in situations like the one described by the JP Morgan task force. In the capital markets portion of the financial services industry it’s common for traders, analysts, strategists and risk managers to use desktop spreadsheets for analysis and modeling. Spreadsheets are handy for these purposes because of the fluid nature of the work these individuals do and their need to quickly translate ideas into quantifiable financial models. Often, these spreadsheets are used and maintained mainly by a single individual and undergo frequent modification to reflect changes in markets or strategies. For these reasons, more formal business intelligence tools have not been an attractive option for these users. It’s unlikely that these individuals could be persuaded to take the time to learn a new set of programming skills, and the alternative – having to communicate concepts and strategies to someone who can translate them into code – is a non-starter. Moreover, these tools can be more cumbersome to use for these purposes, especially for those who have worked for years translating their concepts into a two-dimensional grid.

Desktop spreadsheets have become a bad habit when they are used in situations where the risk of errors is high and the consequences are severe. Increasingly, however, they are a habit that can be broken without too much discomfort. The task force recommended more controls over the spreadsheets used for portfolio valuation. One way of doing this is simply to add vetting and sign-off before a spreadsheet is used, controls to prevent unauthorized changes and periodic audits after that to confirm the soundness of the file. This classic approach, however, is less secure and more time-consuming than it needs to be.

Organizations can and should use at least one of three approaches to achieve better control of the spreadsheets they use for important processes. First, tools available today can automate the process of inspecting even complex spreadsheets for suspicious formulas, broken links, cells that have a fixed value rather than a formula and other structural sources of errors. Second, server-based spreadsheets retain the familiar characteristics of desktop spreadsheets yet enable greater control over their data and formulas, especially when integrating external and internal data sources (say, using third-party feeds for securities pricing or parameters used in risk assessments). Third, multidimensional spreadsheets enable organizations to create libraries of formulas that can be easily vetted and controlled. When a formula needs updating, changing the source formula changes every instance in the file. Some of these applications can be linked to enterprise data sources to eliminate the risks of copy-and-paste data entry. Since they are multidimensional, it’s easy to save multiple risk scenarios to the same file for analysis.
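The first of these approaches, automated inspection, can be pictured with a small Python sketch. It is only an illustration under simplifying assumptions and does not represent how any commercial auditing tool works: the sheet is a plain dictionary of cell reference to cell text, the `audit` function is hypothetical, and the only two checks are for `#REF!` markers inside formulas and for numeric constants sitting in a row that otherwise consists mostly of formulas.

```python
import re
from collections import defaultdict

CELL_REF = re.compile(r"^([A-Z]+)(\d+)$")


def _is_number(text: str) -> bool:
    try:
        float(text)
        return True
    except ValueError:
        return False


def audit(cells: dict) -> list:
    """Return sorted (cell, issue) pairs for two simple structural checks."""
    findings = []
    rows = defaultdict(list)
    for ref, text in cells.items():
        match = CELL_REF.match(ref)
        if not match:
            continue  # skip anything that is not a plain A1-style reference
        rows[int(match.group(2))].append((ref, text))
        # Check 1: a formula that contains a broken-reference marker.
        if text.startswith("=") and "#REF!" in text:
            findings.append((ref, "broken reference"))
    # Check 2: a typed-in number in a row that is otherwise mostly formulas.
    for items in rows.values():
        formulas = [r for r, t in items if t.startswith("=")]
        constants = [r for r, t in items
                     if not t.startswith("=") and _is_number(t)]
        if len(formulas) >= 2 and len(formulas) > len(constants):
            findings.extend((r, "fixed value in formula row") for r in constants)
    return sorted(findings)


# Example: D2 was overwritten with a number; E1 points at a deleted cell.
sheet = {
    "A1": "Base", "B1": "100", "C1": "110", "D1": "120", "E1": "=#REF!*2",
    "A2": "Shocked", "B2": "=B1*1.1", "C2": "=C1*1.1", "D2": "118.5",
    "E2": "=E1*1.1",
}
issues = audit(sheet)
```

Real inspection tools apply many more rules than this, but even two mechanical checks of this kind catch the sort of overwritten cell that a visual review of a large grid tends to miss.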

Spreadsheets are a remarkable productivity tool, but they have limits that users must respect. Desktop spreadsheets are seductive because they are easy to set up. They are especially seductive in capital markets operations because they also are easy to modify and manipulate. However, these same qualities make it just as easy to build in errors with grave consequences that can be nearly impossible to spot.

A decade ago, there were few practical alternatives to desktop spreadsheets. Today, there are many, and therefore fewer good reasons not to find and use them. The issues uncovered by the “London Whale” episode are far from unique. Only when a disaster occurs and the fallout is made public do people see the consequences, but by then it’s too late. Executives, especially in risk management functions, must become more knowledgeable about spreadsheet alternatives so they can eliminate systemic risks in their internal operations.

Regards,

Robert Kugel

SVP Research